How to quickly obtain the best parameters for AI training

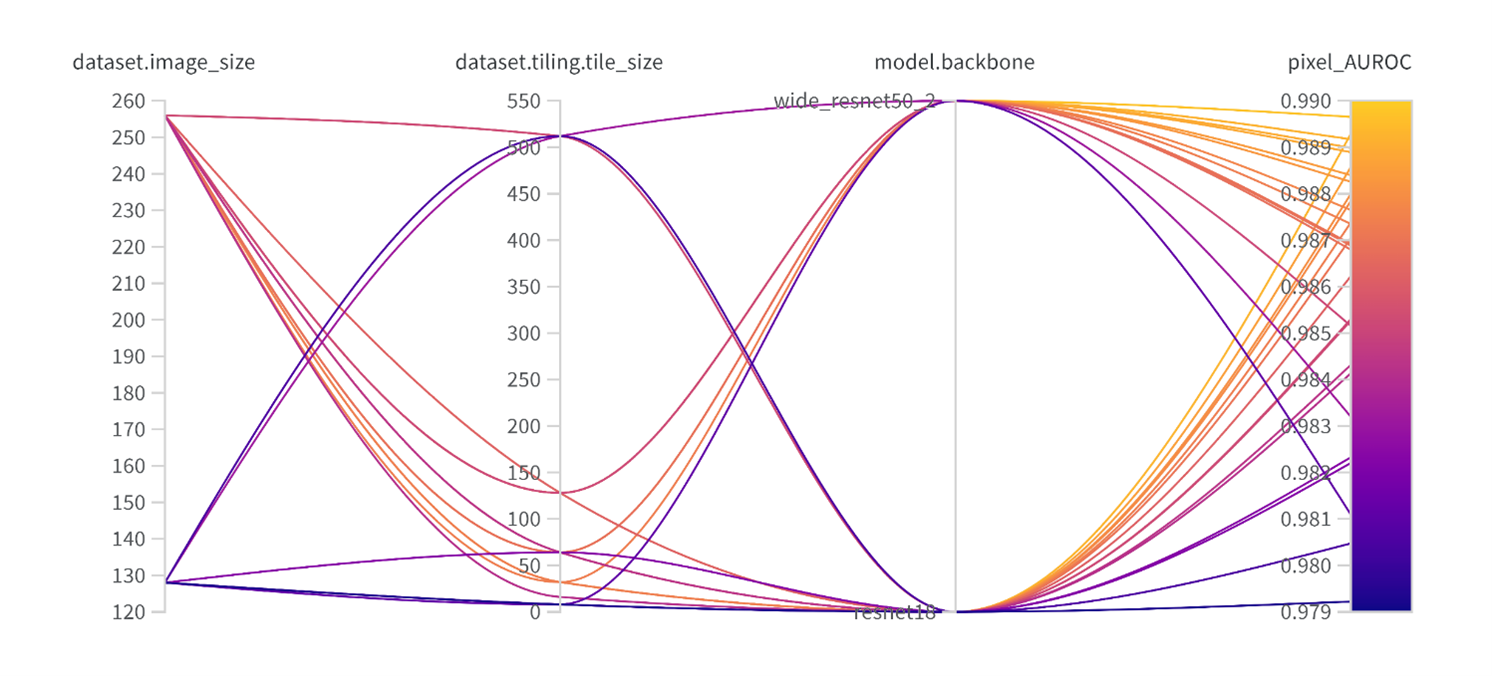

Deep learning relies on many hyperparameters that are set when training a neural network, such as image size, learning rate, and the number of training iterations. Data augmentation alone adds many more parameters to adjust. Because every parameter can affect the final result, people often spend a great deal of time trying different values in search of better training results.

However, these tuning runs do not need someone to watch over them. The relationship between each parameter and the results can be analyzed statistically once the whole experiment has finished. If we run the trials in batches with a program that executes them one after another, we can quickly test a large number of parameter combinations. We therefore found a sample hyperparameter-optimization program online that can quickly test how well most parameters suit the application scenario and how strongly each one affects the result. With it, we can find suitable parameters in a very short time and produce the best-performing model among those tested.
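The batch-then-analyze workflow described above can be sketched as follows. This is not the sample program mentioned in the article. It is a minimal illustration under stated assumptions: the search space (`image_size`, `learning_rate`, `epochs`, and a hypothetical augmentation parameter `flip_prob`) is made up, and `train_and_evaluate` is a hypothetical stand-in that returns a toy score instead of actually training a model. The script runs every combination unattended, then computes a Pearson correlation between each parameter and the score once all runs are done.

```python
import itertools

# Hypothetical search space; parameter names and values are illustrative assumptions.
SEARCH_SPACE = {
    "image_size": [224, 320, 416],
    "learning_rate": [1e-4, 1e-3, 1e-2],
    "epochs": [10, 20, 40],
    "flip_prob": [0.0, 0.5],  # example data-augmentation parameter (assumed)
}


def train_and_evaluate(params):
    """Hypothetical placeholder for a real training run.

    Returns a toy validation score so the sketch runs; replace it with
    code that trains a model with `params` and evaluates it.
    """
    score = 0.5
    score += 0.0005 * (params["image_size"] - 224)
    score -= abs(params["learning_rate"] - 1e-3) * 10
    score += 0.002 * params["epochs"]
    score += 0.05 * params["flip_prob"]
    return score


def grid_search(space):
    """Run every parameter combination in sequence and record its score."""
    keys = list(space)
    results = []
    for values in itertools.product(*(space[k] for k in keys)):
        params = dict(zip(keys, values))
        results.append((params, train_and_evaluate(params)))
    return results


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / (vx * vy) ** 0.5


# Batch phase: all runs execute without supervision.
results = grid_search(SEARCH_SPACE)

# Analysis phase: pick the best run and relate each parameter to the score.
best_params, best_score = max(results, key=lambda r: r[1])
scores = [s for _, s in results]
correlations = {
    name: pearson([p[name] for p, _ in results], scores) for name in SEARCH_SPACE
}

print("best:", best_params, round(best_score, 3))
for name, r in sorted(correlations.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>14}: r = {r:+.2f}")
```

A full grid grows multiplicatively with every added parameter, so for larger search spaces random sampling of combinations is a common substitute. Note also that Pearson correlation only captures linear trends: in the toy score the learning rate has an optimum in the middle of its range, which a single correlation value does not reveal, so the best individual runs are worth inspecting alongside the correlations.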